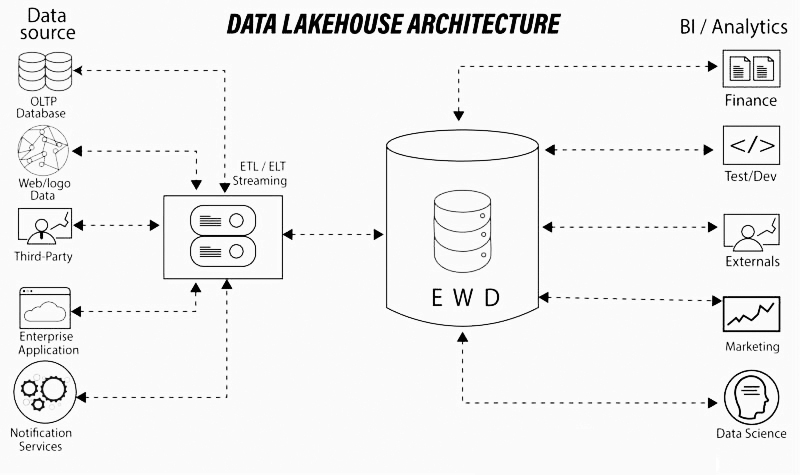

Data lakehouses are enabled by a new, open system design that implements data structures and data management capabilities similar to those of a data warehouse directly on the low-cost storage used for data lakes. Because these capabilities are merged into a single system, data teams can work faster without having to access several systems. Data lakehouses help ensure that the most complete and current data is available for initiatives such as data science, machine learning, and business analytics.
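
To make the idea of warehouse-style data management "directly on affordable storage" more concrete, here is a deliberately tiny Python sketch. It assumes a local directory stands in for object storage, and the `ToyTable` class, the `_log` folder, and the JSON commit files are all hypothetical. Open table formats such as Delta Lake follow a broadly similar idea (immutable data files plus a transaction log) but are far more sophisticated.

```python
from __future__ import annotations

import json
import os
import tempfile
from typing import Optional


class ToyTable:
    """Hypothetical table: data files on plain storage plus an ordered commit log."""

    def __init__(self, root: str) -> None:
        self.root = root
        self.log_dir = os.path.join(root, "_log")
        os.makedirs(self.log_dir, exist_ok=True)

    def _versions(self) -> list[int]:
        # Each commit is a file named <version>.json; sort versions numerically.
        return sorted(
            int(name[: -len(".json")])
            for name in os.listdir(self.log_dir)
            if name.endswith(".json")
        )

    def append(self, rows: list[dict]) -> int:
        versions = self._versions()
        version = versions[-1] + 1 if versions else 0
        data_name = f"part-{version:05d}.jsonl"
        # 1) Write the data file first; readers ignore it until a commit references it.
        with open(os.path.join(self.root, data_name), "w") as f:
            for row in rows:
                f.write(json.dumps(row) + "\n")
        # 2) Record the commit. Mode "x" refuses to overwrite an existing version.
        with open(os.path.join(self.log_dir, f"{version}.json"), "x") as f:
            json.dump({"add": data_name}, f)
        return version

    def read(self, as_of: Optional[int] = None) -> list[dict]:
        """Replay the log up to `as_of` (inclusive); None means the latest version."""
        rows: list[dict] = []
        for version in self._versions():
            if as_of is not None and version > as_of:
                break
            with open(os.path.join(self.log_dir, f"{version}.json")) as f:
                entry = json.load(f)
            with open(os.path.join(self.root, entry["add"])) as f:
                rows.extend(json.loads(line) for line in f)
        return rows


with tempfile.TemporaryDirectory() as d:
    table = ToyTable(d)
    table.append([{"id": 1, "amount": 10}])
    table.append([{"id": 2, "amount": 20}])
    print(table.read())         # [{'id': 1, 'amount': 10}, {'id': 2, 'amount': 20}]
    print(table.read(as_of=0))  # [{'id': 1, 'amount': 10}]  (time travel)
```

Because readers only follow the commit log, a data file that is still being written is never read, and replaying the log up to an earlier version gives a simple form of time travel. The sketch assumes a single writer; concurrency control, schema enforcement, and compaction are out of scope.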

Due in part to its ability to store any form of data (internal or external, structured or unstructured), a data lake tends to offer several advantages over other types of data repositories, such as data warehouses or data marts. Its lack of rigid structure and greater flexibility make it easy to modify the repository’s models and queries and to reorganize it as business requirements shift.

In addition to these structural advantages, a data lake typically improves data accessibility and democratization. Although data scientists tend to be its main users, the repository lets anyone extract insights from company data quickly and efficiently. To unlock its full potential, however, you need to follow a set of best practices. Let’s look at how to do that.

Best Practices to Follow in Cloud Lakehouse Data Management

1. Develop a Data Governance Plan

Don’t put off thinking about data quality until after your data lake has been built. Every big data project should start with a carefully thought-out data governance framework that helps ensure consistent data and shared processes and responsibilities.

To get started, identify the business reasons why specific data needs to be governed and the outcomes you expect from this effort. This plan will serve as the structure for your data governance system.
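
One way to make a governance plan actionable is to express its data quality expectations as named rules, each with an accountable owner, that every dataset is checked against. The sketch below is a minimal illustration under that assumption; the `Rule` class, the rule names, and the owner teams are hypothetical examples, not part of any particular product.

```python
from __future__ import annotations

import re
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Rule:
    """A hypothetical governance rule: a named check with an accountable owner."""
    name: str
    owner: str                      # team responsible for fixing violations
    check: Callable[[dict], bool]   # returns True when the record complies


RULES = [
    Rule("customer_id_present", "crm-stewards", lambda r: bool(r.get("customer_id"))),
    Rule("email_format", "crm-stewards",
         lambda r: re.fullmatch(r"[^@\s]+@[^@\s]+\.[^@\s]+", r.get("email", "")) is not None),
    Rule("country_iso2", "finance-stewards",
         lambda r: len(r.get("country", "")) == 2 and r["country"].isupper()),
]


def validate(records: list[dict], rules: list[Rule] = RULES) -> dict[str, list[int]]:
    """Return, for each failing rule, the indexes of the records that violate it."""
    failures: dict[str, list[int]] = {}
    for i, record in enumerate(records):
        for rule in rules:
            if not rule.check(record):
                failures.setdefault(rule.name, []).append(i)
    return failures


records = [
    {"customer_id": "C1", "email": "ana@example.com", "country": "PT"},
    {"customer_id": "", "email": "not-an-email", "country": "pt"},
]
print(validate(records))
# {'customer_id_present': [1], 'email_format': [1], 'country_iso2': [1]}
```

Keeping the owner next to each rule reflects the "shared processes and responsibilities" part of the plan: a failure report can be routed to the team accountable for it.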

2. Give Preference to Open Formats and Open Interfaces

Open interfaces enable interoperability and help you avoid dependence on any single vendor. In the past, vendors built proprietary technologies and closed interfaces that restricted how businesses could store, use, and share data across applications.

Building on open interfaces lets you build for the future: it makes data more durable and portable, so you can reuse it in additional applications and use cases. It also creates an ecosystem of partners whose technologies can be readily integrated into the lakehouse platform through those open interfaces.

3. Scale for Data Volumes of the Future

The volume of available data is enormous, and it grows every day. You must consider how your data lake will handle both current and upcoming data initiatives. This means ensuring you have enough developers and systems in place to manage, control, and clean up hundreds or thousands of new data sources effectively and affordably, without degrading performance.

4. Monitor Business Results

If you don’t know what matters most to the company, you won’t be able to transform it. The key to defining the questions, use cases, analytics, data, and underlying architecture and technology needs for your data lake is understanding the organization’s main business activities and goals.

5. Grow the Data Team

Rather than being the sole responsibility of the IT team, data quality is increasingly a company-wide strategic objective that involves people from several departments. Because incorrect data inevitably affects business analysis, it makes sense to include business users in your data quality process.

Giving business analysts self-service access helps your data lake achieve some of its core goals, because analysts have the domain knowledge and expertise to select the appropriate data for different business needs.

6. Future-Proof Your Infrastructure

Because business requirements change continuously, your data lake will probably need to run on several platforms. Most businesses operate in multi-cloud and hybrid environments: teams within the same firm often use different cloud providers, depending on their needs and resources, alongside existing on-premises infrastructure.

Businesses must carefully weigh how to combine data warehouse and data lake capabilities in the cloud. A lakehouse can support all of your analytics use cases, from simple BI implementations to complex data science algorithms and AI/ML. Plan to start small, on an architecture that lets you scale quickly and consistently.
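
A common way to keep a data platform portable across clouds and on-premises systems is to have pipeline code depend on a small storage interface rather than on a specific provider. The sketch below assumes that approach; `ObjectStore`, `InMemoryStore`, `LocalFileStore`, and `copy_raw_file` are hypothetical names, and the in-memory store merely stands in for a cloud bucket. A real adapter would wrap a provider's SDK, which is not shown here.

```python
from __future__ import annotations

import os
import tempfile
from typing import Protocol


class ObjectStore(Protocol):
    """Minimal, hypothetical storage interface the pipeline depends on."""
    def put(self, key: str, data: bytes) -> None: ...
    def get(self, key: str) -> bytes: ...


class InMemoryStore:
    """Stands in for a cloud bucket in this sketch."""
    def __init__(self) -> None:
        self._objects: dict[str, bytes] = {}

    def put(self, key: str, data: bytes) -> None:
        self._objects[key] = data

    def get(self, key: str) -> bytes:
        return self._objects[key]


class LocalFileStore:
    """Stands in for on-premises storage; keys map to paths under `root`."""
    def __init__(self, root: str) -> None:
        self.root = root

    def _path(self, key: str) -> str:
        return os.path.join(self.root, *key.split("/"))

    def put(self, key: str, data: bytes) -> None:
        path = self._path(key)
        os.makedirs(os.path.dirname(path), exist_ok=True)
        with open(path, "wb") as f:
            f.write(data)

    def get(self, key: str) -> bytes:
        with open(self._path(key), "rb") as f:
            return f.read()


def copy_raw_file(src: ObjectStore, dst: ObjectStore, key: str) -> None:
    # Pipeline logic only sees the interface, so backends can be swapped freely.
    dst.put(key, src.get(key))


with tempfile.TemporaryDirectory() as d:
    on_prem, cloud = LocalFileStore(d), InMemoryStore()
    on_prem.put("raw/orders/2024-01-01.csv", b"id,amount\n1,9.99\n")
    copy_raw_file(on_prem, cloud, "raw/orders/2024-01-01.csv")
    print(cloud.get("raw/orders/2024-01-01.csv"))  # b'id,amount\n1,9.99\n'
```

Adding another backend requires no change to `copy_raw_file`, which is the property that helps when different teams use different providers.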

Other Key Aspects to Consider

1. Discover and Understand the Data

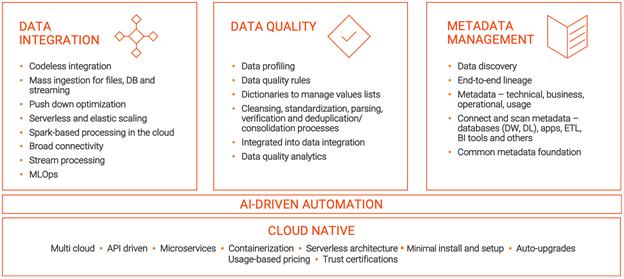

A metadata management tool gives different groups of data citizens a common language for communicating and managing their work effectively. For instance, business users work with organizational metrics and policies, while business data stewards manage glossary terms and business rules along with other business metadata. These stewards support both the creation and the use of metadata.

System data stewards manage technical metadata and ensure that systems comply with business regulations. Data architects use logical and conceptual models to link technical and business metadata. They account for more than half of all metadata consumers and ask questions such as which data structure is recommended for storing customer information.
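
The link between business and technical metadata can be pictured as a glossary whose terms point at physical columns. The sketch below is a minimal, hypothetical model of that idea: the `GlossaryTerm` and `Catalog` classes and the sample column name are illustrative assumptions, not any metadata tool's API. It answers one typical question in each direction.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class GlossaryTerm:
    """Business metadata, as maintained by business data stewards (hypothetical model)."""
    name: str
    definition: str
    business_rule: str
    columns: list[str] = field(default_factory=list)  # links to technical metadata


class Catalog:
    def __init__(self) -> None:
        self.terms: dict[str, GlossaryTerm] = {}

    def add_term(self, term: GlossaryTerm) -> None:
        self.terms[term.name.lower()] = term

    def link(self, term_name: str, column: str) -> None:
        """Link a business term to a physical column (the data architect's role)."""
        self.terms[term_name.lower()].columns.append(column)

    def where_is(self, term_name: str) -> list[str]:
        """Business question: which physical columns hold this concept?"""
        return self.terms[term_name.lower()].columns

    def terms_for_column(self, column: str) -> list[str]:
        """Technical question: what business meaning does this column carry?"""
        return [t.name for t in self.terms.values() if column in t.columns]


catalog = Catalog()
catalog.add_term(GlossaryTerm(
    "Active Customer",
    "A customer with at least one order in the last 12 months.",
    "Exclude internal test accounts.",
))
catalog.link("Active Customer", "lakehouse.sales.customers.is_active")
print(catalog.where_is("active customer"))
# ['lakehouse.sales.customers.is_active']
print(catalog.terms_for_column("lakehouse.sales.customers.is_active"))
# ['Active Customer']
```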

Talk to our specialists to leverage the power of cloud lakehouse data management. Triade is an official Informatica partner. With the help of our cloud lakehouse data management offering, your business will have access to world-class services, the best people, and the best products to promote innovation, speed time to market, and democratize data across all of your functional areas.

Our clients strongly value our expertise and vendor-neutral strategies. Our staff has extensive knowledge of many different cloud platforms, including Snowflake, Databricks Delta Lake, Microsoft Azure, Google Cloud Platform, and Amazon Web Services. To fast-track your cloud data journey, get in touch with us.

2. Use Metadata-Driven Approach to Build Your Data Pipelines

Take the ingestion step as an example. An intelligent, metadata-driven mass ingestion solution can accelerate your project by handling both the initial load and subsequent delta loads. It can also update itself and your target schemas automatically when the source changes, keeping your first (raw) layer in sync with the source in both data and structure.
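
The sketch below illustrates the idea under simplifying assumptions: each source is described only by metadata (`SourceConfig`), the first run performs a full load, later runs load only rows whose watermark column exceeds the last value seen, and new source columns are added to the raw layer's schema automatically. All names are hypothetical, sources are in-memory lists, and deletes are not handled; a commercial mass ingestion product works differently and far more robustly.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class SourceConfig:
    """Hypothetical metadata describing one source; onboarding a source needs no new code."""
    table: str
    key: str
    watermark_column: str


@dataclass
class RawTable:
    schema: list[str] = field(default_factory=list)
    rows: dict = field(default_factory=dict)  # primary key -> latest row
    watermark: object = None                  # highest watermark loaded so far


def ingest(cfg: SourceConfig, source_rows: list[dict], target: RawTable) -> int:
    """Full load when no watermark exists, delta load afterwards. Returns rows loaded."""
    if target.watermark is None:
        batch = source_rows                      # initial (full) load
    else:
        batch = [r for r in source_rows if r[cfg.watermark_column] > target.watermark]
    for row in batch:
        # Schema sync: columns that newly appear in the source are added to the target.
        # Older rows simply lack the new column (treated as null).
        for col in row:
            if col not in target.schema:
                target.schema.append(col)
        target.rows[row[cfg.key]] = row          # upsert by primary key
    if batch:
        target.watermark = max(r[cfg.watermark_column] for r in batch)
    return len(batch)


cfg = SourceConfig(table="orders", key="id", watermark_column="updated_at")
raw = RawTable()
source = [
    {"id": 1, "amount": 10, "updated_at": 1},
    {"id": 2, "amount": 20, "updated_at": 2},
]
print(ingest(cfg, source, raw))  # 2  (initial load)
source[0] = {"id": 1, "amount": 12, "updated_at": 4}
source.append({"id": 3, "amount": 5, "updated_at": 3, "currency": "EUR"})
print(ingest(cfg, source, raw))  # 2  (delta: id 1 changed, id 3 is new)
print(raw.schema)                # ['id', 'amount', 'updated_at', 'currency']
```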

3. Provision Data Using DevOps Practices

Deployment and testing are the two most essential components of development. Continuous integration (CI) is the practice of regularly merging code changes and automatically building and testing the program. Continuous delivery (CD), by contrast, refers to continuously committing code to the repository, automating bug testing, and releasing repository updates to the production environment. Big data organizations are increasingly adopting the CI/CD approach because it supports continuous analytics, streamlines data-related processes, and helps them plan software updates better.
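
In a data context, the testing half of CI usually means automated tests for transformations that run on every commit. The sketch below shows one such test using Python's built-in `unittest` module; the `deduplicate_latest` transformation is a hypothetical example. A CI server could run it with `python -m unittest`, so that a failing test blocks the change before it reaches production.

```python
from __future__ import annotations

import unittest


def deduplicate_latest(rows: list[dict], key: str, ts: str) -> list[dict]:
    """Keep only the most recent version of each record (hypothetical transformation)."""
    latest: dict = {}
    for row in rows:
        current = latest.get(row[key])
        if current is None or row[ts] > current[ts]:
            latest[row[key]] = row
    # Sort by key so the output order is deterministic and easy to assert on.
    return sorted(latest.values(), key=lambda r: r[key])


class DeduplicateLatestTest(unittest.TestCase):
    def test_keeps_newest_version(self):
        rows = [
            {"id": 1, "status": "new", "ts": 1},
            {"id": 1, "status": "shipped", "ts": 2},
            {"id": 2, "status": "new", "ts": 1},
        ]
        result = deduplicate_latest(rows, key="id", ts="ts")
        self.assertEqual([r["status"] for r in result], ["shipped", "new"])

    def test_empty_input(self):
        self.assertEqual(deduplicate_latest([], key="id", ts="ts"), [])
```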
